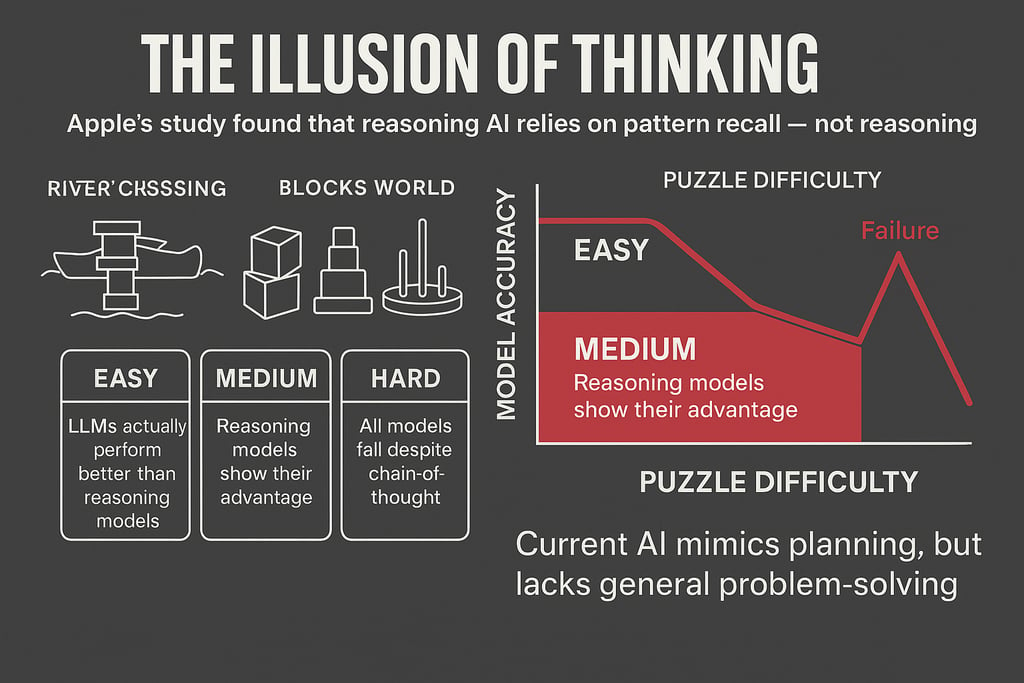

The Illusion of Thinking

Current AI doesn’t reason — it simulates reasoning. AGI — the point where machine thinking equals human thinking — remains an open frontier. Apple’s work reminds us to approach AI with both optimism and humility.

Mark Saymen

6/8/2025 · 1 min read

Apple Drops a Reality Bomb: Today's "Reasoning" AI Isn’t Really Reasoning

📢 Apple’s new paper, "The Illusion of Thinking", delivers a wake-up call: leading “reasoning” models like OpenAI’s o3‑mini, DeepSeek‑R1, Claude 3.7 Sonnet‑Thinking, and Gemini don’t actually reason; they merely excel at memorizing patterns and mimicking thought processes.

🔍 Key Findings from Controlled Puzzle Tests:

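Apple used classic logic puzzles (e.g., Tower of Hanoi, River Crossing, Blocks World) to stress-test “reasoning” AIs. These puzzles were chosen because their difficulty can be scaled up precisely and they are less exposed to training-data contamination than standard benchmarks.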
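To make "scaling up difficulty" concrete, here is a minimal Python sketch (an illustration only, not code from Apple's paper; the function name `hanoi_moves` is made up for this post). It generates the optimal Tower of Hanoi solution and shows how the required number of moves grows as 2^n - 1 with the number of disks n:

```python
def hanoi_moves(n, source="A", target="C", spare="B"):
    """Return the optimal solution for n disks as (disk, from_peg, to_peg) tuples."""
    if n == 0:
        return []
    return (
        hanoi_moves(n - 1, source, spare, target)    # park the n-1 smaller disks on the spare peg
        + [(n, source, target)]                      # move the largest disk to the target
        + hanoi_moves(n - 1, spare, target, source)  # bring the smaller disks back on top
    )


# One extra disk roughly doubles the length of a correct answer.
for n in (3, 7, 10):
    print(n, "disks ->", len(hanoi_moves(n)), "moves")
# 3 disks -> 7 moves
# 7 disks -> 127 moves
# 10 disks -> 1023 moves
```

A single knob (the disk count) changes how long a correct answer has to be while the rules stay identical, which is what makes this kind of puzzle handy for finding where accuracy falls off.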

💥 Accuracy Collapse

All models performed well on easy puzzles, struggled at medium difficulty, and hit 0% accuracy on hard puzzles, showing a sharp cliff in capability.

🧠 Thinking Backfires

Rather than trying harder, models actually used fewer tokens, effectively giving up as puzzles got tougher.

Three Performance Zones

Easy: Standard LLMs outperform “reasoning” models.

Medium: Reasoning models show some advantage.

Hard: All models fail, even with long chain-of-thought.

Algorithm Blindness

Even when provided the correct algorithm, models still collapsed at the same complexity level, with no sign of strategic execution.

Inconsistency Across Tasks

A model could solve Tower of Hanoi with 100+ steps but fail on a simpler 5-step River Crossing — exposing fragile, dataset-based pattern matching.
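Step counts like these only mean something if each move is checked against the puzzle rules. As a rough sketch of how that can be done (an assumption about the general approach, not Apple's actual evaluation harness; `replay_hanoi` is a hypothetical helper), the Python below replays a proposed Tower of Hanoi move list and reports where it first breaks a rule:

```python
def replay_hanoi(n_disks, moves):
    """Replay (from_peg, to_peg) moves on pegs "A", "B", "C".

    Returns (first_bad_index, solved): first_bad_index is the position of the
    first illegal move (or None if all moves are legal), and solved says
    whether every disk ended up on peg "C".
    """
    goal = list(range(n_disks, 0, -1))          # largest disk at the bottom
    pegs = {"A": list(goal), "B": [], "C": []}
    for i, (src, dst) in enumerate(moves):
        if not pegs[src]:
            return i, False                     # tried to move from an empty peg
        disk = pegs[src][-1]
        if pegs[dst] and pegs[dst][-1] < disk:
            return i, False                     # larger disk placed on a smaller one
        pegs[dst].append(pegs[src].pop())
    return None, pegs["C"] == goal


# A correct 2-disk solution, then an attempt that breaks a rule on its second move.
print(replay_hanoi(2, [("A", "B"), ("A", "C"), ("B", "C")]))  # (None, True)
print(replay_hanoi(2, [("A", "C"), ("A", "C")]))              # (1, False)
```

Run over a model's output, a checker like this turns "the model failed" into "the model failed at move k", which is the kind of number behind the 100+ step versus 5-step comparison.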

🤯 Implications for AI and AGI

Reasoning = Pattern Recall, not general logic or planning.

Chain-of-thought prompts don’t guarantee deeper cognition; they may just allocate fluff tokens.

Benchmarks must evolve: puzzles with controllable complexity revealed limitations that math/coding tests may miss.

💡 What This Means for Practitioners & Builders:

Use LLMs for idea generation, drafting, or scalable computations, not for critical planning or novel problem-solving.

Treat chain-of-thought as a tool, not proof of cognition: under the hood, it's predictive mimicry.

Human oversight remains essential — don’t mistake confident AI outputs for true understanding.

🏁 The Final Word:

Current AI doesn’t reason — it simulates reasoning. AGI — the point where machine thinking equals human thinking — remains an open frontier. Apple’s work reminds us to approach AI with both optimism and humility.

#AI #MachineLearning #ReasoningModels #LLM #AppleResearch #AGI #ChainOfThought #AIethics #PromptEngineering #FutureOfAI #AIalignment