Self-Driving Level 5

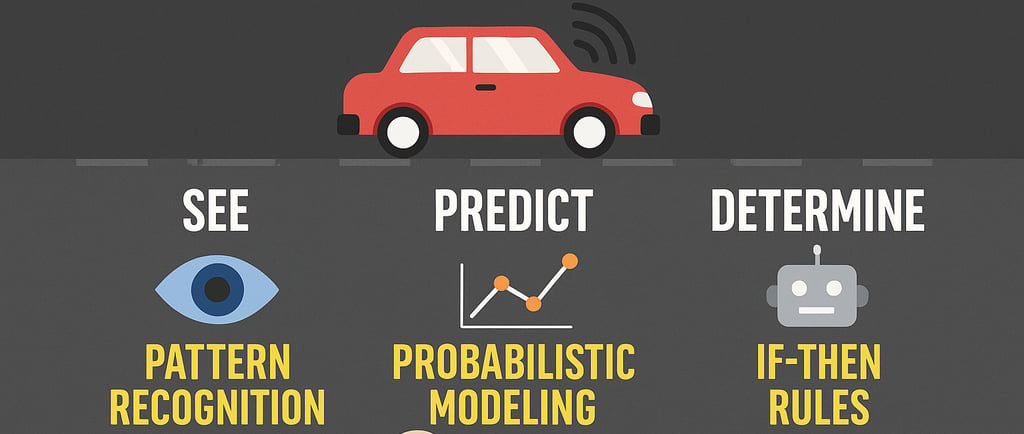


Mark Saymen

6/11/2025 · 1 min read

If current AI doesn’t reason logically… how does a self-driving car "drive"?

🔍 The Answer:

Self-driving cars don’t “reason” in the way humans do.

They operate through a combination of pattern recognition, predictive modeling, and rule-based systems — not generalized logic.

Here’s how it works, and why Apple’s research still applies:

🧠 1. Pattern Matching at Scale (NOT Reasoning)

Self-driving systems (like Tesla’s FSD or the Waymo Driver) are trained on millions of hours of driving data.

They learn:

What a stop sign “looks like”

How to anticipate pedestrian motion

When other drivers slow down or swerve

This is pattern learning, not abstract reasoning.
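
To make "pattern learning" concrete, here is a toy sketch, not how any production driving stack works. It assumes two invented features per sign (how red it is, and a scaled corner count) and labels a new observation by whichever group of training examples it sits closest to. The feature values, labels, and the nearest-centroid approach are all illustrative assumptions.

```python
import math

# Hypothetical training examples: (redness, corner_count / 10). Values are invented.
TRAINING = {
    "stop_sign": [(0.9, 0.8), (0.8, 0.8), (0.95, 0.8)],
    "yield_sign": [(0.7, 0.3), (0.6, 0.3), (0.75, 0.3)],
}


def centroid(points):
    """Average the example points of one class, dimension by dimension."""
    n = len(points)
    return tuple(sum(p[i] for p in points) / n for i in range(len(points[0])))


# "Learning" here is just summarizing each class by its average example.
CENTROIDS = {label: centroid(pts) for label, pts in TRAINING.items()}


def classify(features):
    """Return the label whose centroid is nearest to the given features."""
    return min(CENTROIDS, key=lambda label: math.dist(features, CENTROIDS[label]))


print(classify((0.85, 0.75)))  # closest to the stop-sign examples
```

Nothing in this code knows what a stop sign means. It only measures similarity to examples it has already seen.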

⚙️ 2. Probabilistic Prediction, Not Deductive Logic

Cars don’t reason like:

“There’s a dog on the road, therefore I should stop.”

Instead, the model sees something shaped like a dog, assigns a probability, and triggers a learned action (e.g., braking).

It's all weighted, threshold-like behavior driven by statistical models, not step-by-step logic.
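
A minimal sketch of that idea, with hand-written thresholds standing in for what a real system would learn from data. The `Detection` type, the threshold values, and the action names are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    label: str           # what the perception model thinks it sees
    probability: float   # model confidence in [0, 1]
    distance_m: float    # estimated distance to the object


# Hypothetical thresholds; in a real system these mappings would be learned or tuned.
BRAKE_PROBABILITY = 0.6
SLOW_PROBABILITY = 0.3
CRITICAL_DISTANCE_M = 30.0


def choose_action(d: Detection) -> str:
    """Map a confidence score to an action. No 'therefore' step is involved."""
    if d.distance_m > CRITICAL_DISTANCE_M:
        return "continue"
    if d.probability >= BRAKE_PROBABILITY:
        return "brake"
    if d.probability >= SLOW_PROBABILITY:
        return "slow_down"
    return "continue"


print(choose_action(Detection("dog", 0.85, 12.0)))  # brake
print(choose_action(Detection("dog", 0.40, 12.0)))  # slow_down
```

The same dog-shaped object leads to different actions depending only on the number the model assigns to it.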

🧩 3. Why Apple’s Findings Matter

Apple’s research reported that when problems deviate far enough from known patterns (like sufficiently hard puzzles), reasoning AIs break down.

In driving terms:

What if the road is partially flooded, but the detour sign is wrongly placed?

What if a kid is chasing a ball into a blind turn, but the system hasn’t seen that scenario before?

In those unseen edge cases, the system’s limits emerge.

This is where humans reason, but today’s AI may guess — or fail.
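
One way to see why a model "guesses" is sketched below. It assumes a classifier with a fixed set of known classes and a softmax output, which is a common design but not necessarily what any specific vehicle uses. The class names and raw scores are invented.

```python
import math

# Hypothetical closed set of classes the model was trained on.
KNOWN_CLASSES = ["pedestrian", "vehicle", "cyclist"]


def softmax(scores):
    """Turn raw scores into probabilities that always sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def predict(scores):
    """Return the most probable known class and its probability."""
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    return KNOWN_CLASSES[best], probs[best]


# Invented raw scores for an object unlike anything in the training data.
label, confidence = predict([1.2, 4.0, 0.3])
print(label, round(confidence, 2))
```

With these scores the model answers "vehicle" at about 0.92 confidence. Because the probabilities must sum to 1 across known classes, there is no built-in way to say "I have never seen this before".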

⚠️ 4. This Is Why Full L5 Autonomy Is So Hard

True Level 5 autonomy (go anywhere, in any condition, with no human input) requires:

✅ Generalized reasoning

✅ Causal understanding

✅ Long-term planning

Today’s systems are stuck at narrow generalization, not human-like abstraction.

🚀 The Future?

We’ll need models that combine pattern recognition with reasoning systems — likely involving:

Hybrid AI architectures (neural + symbolic)

Causal inference

World modeling beyond language tokens
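
As a rough illustration of the neural + symbolic idea, the sketch below lets a stand-in "learned" policy score actions and then lets explicit rules veto some of them. The `learned_policy` function, its scores, the scene keys, and the rules are all hypothetical. A real hybrid architecture would be far more involved.

```python
def learned_policy(scene: dict) -> dict:
    """Stand-in for a neural network. Returns invented, fixed action scores."""
    return {"proceed": 0.7, "slow_down": 0.2, "stop": 0.1}


# Symbolic layer: (condition on the scene, actions that condition forbids).
RULES = [
    (lambda s: s.get("traffic_light") == "red", {"proceed", "slow_down"}),
    (lambda s: s.get("pedestrian_in_lane", False), {"proceed"}),
]


def decide(scene: dict) -> str:
    """Pick the highest-scoring action that no rule forbids."""
    scores = learned_policy(scene)
    forbidden = set()
    for condition, banned in RULES:
        if condition(scene):
            forbidden |= banned
    # "stop" is never banned by these rules, so at least one action remains.
    allowed = {action: p for action, p in scores.items() if action not in forbidden}
    return max(allowed, key=allowed.get)


print(decide({}))                            # proceed
print(decide({"traffic_light": "red"}))      # stop
print(decide({"pedestrian_in_lane": True}))  # slow_down
```

The pattern-matching part still proposes what to do. The rule layer adds constraints that hold even in situations the learned part has never encountered.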

Until then, autonomy will advance — but slowly and with careful guardrails.